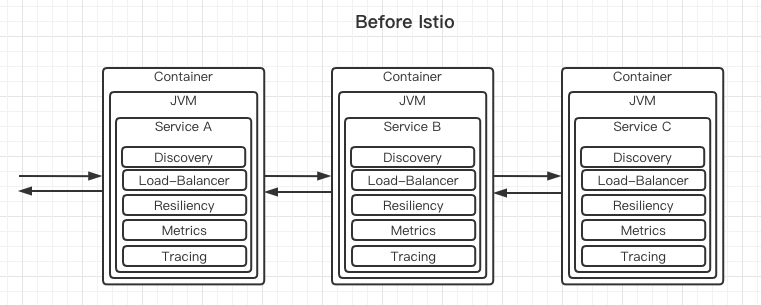

Understand the requirements and challenges of a microservices architecture:

- API
- Discovery
- Invocation
- Elasticity
- Resilience
- Pipeline
- Authentication
- Logging
- Monitoring
- Tracing

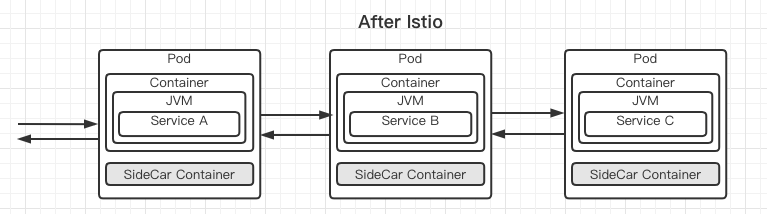

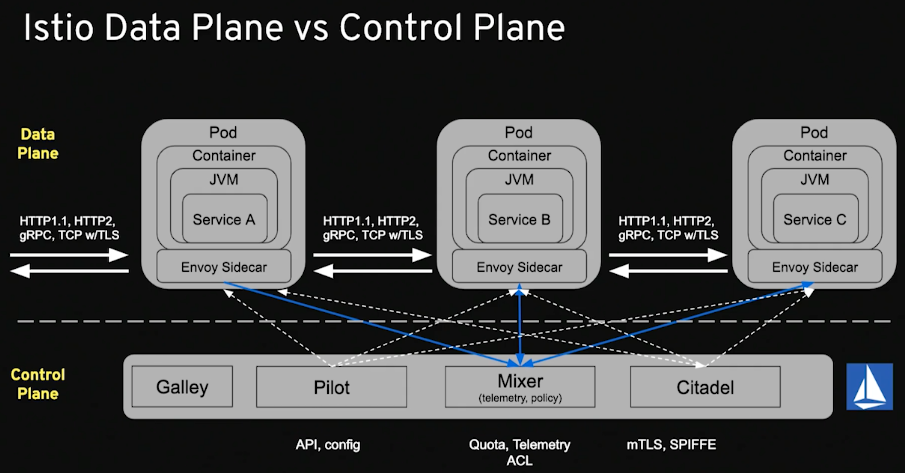

The sidecar intercepts all network traffic.

How to add an Istio proxy (sidecar)?

```shell
istioctl kube-inject -f NormalDeployment.yaml
```

or

```shell
kubectl label namespace myspace istio-injection=enabled
```

To “see” the sidecar:

```shell
kubectl describe deployment customer
```

Envoy is the current sidecar.

Next Generation Microservice - Service Mesh

Install Istio

https://github.com/redhat-developer-demos/istio-tutorial

https://github.com/burrsutter/scripts-istio

```shell
export MINIKUBE_HOME=/Users/lixiangliu/Projects/k8s/minikube-istio
export PATH=$MINIKUBE_HOME/bin:$PATH
export KUBECONFIG=$MINIKUBE_HOME/.kube/config
export KUBE_EDITOR="code -w"

export ISTIO_HOME=$MINIKUBE_HOME/istio-1.6.5
export PATH=$ISTIO_HOME/bin:$PATH

cd $MINIKUBE_HOME
```

Start:

```shell
% minikube start -p istio-mk --memory=8192 --cpus=3 \
  --kubernetes-version=v1.18.3 \
  --vm-driver=virtualbox \
  --disk-size=30g
```

Install Istio:

```shell
% curl -L https://github.com/istio/istio/releases/download/1.6.5/istio-1.6.5-osx.tar.gz | tar xz
% cd istio-1.6.5
% export PATH=$ISTIO_HOME/bin:$PATH
% istioctl manifest apply --set profile=demo --set values.global.proxy.privileged=true
% kubectl config set-context $(kubectl config current-context) --namespace=istio-system
% kubectl get pods -w
```

The output is:

```
NAME                                    READY   STATUS    RESTARTS   AGE
grafana-b54bb57b9-j6wc8                 1/1     Running   0          9m49s
istio-egressgateway-7486cf8c97-kdffx    1/1     Running   0          9m50s
istio-ingressgateway-6bcb9d7bbf-fdkv5   1/1     Running   0          9m50s
istio-tracing-9dd6c4f7c-wpshx           1/1     Running   0          9m49s
istiod-788f76c8fc-5smwm                 1/1     Running   0          10m
kiali-d45468dc4-p7tb9                   1/1     Running   0          9m49s
prometheus-6477cfb669-stbh8             2/2     Running   0          9m49s
```
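Rather than watching the list by eye, the check can be scripted. The sketch below assumes the standard `kubectl get pods` column layout (NAME, READY, STATUS, ...) shown above; the function name `all_pods_ready` is made up for illustration.

```shell
# all_pods_ready: reads `kubectl get pods` output on stdin.
# Exits 0 only if every pod is Running with all containers ready;
# otherwise prints the offending pod names and exits 1.
all_pods_ready() {
  awk 'NR > 1 {
         split($2, ready, "/")            # READY column, e.g. "2/2"
         if (ready[1] != ready[2] || $3 != "Running") {
           print "not ready: " $1
           bad = 1
         }
       }
       END { exit bad }'
}

# Usage against a live cluster:
#   kubectl get pods -n istio-system | all_pods_ready && echo "Istio is up"
```

On a live cluster, `kubectl wait --for=condition=Ready pods --all -n istio-system --timeout=300s` blocks until the same condition holds.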

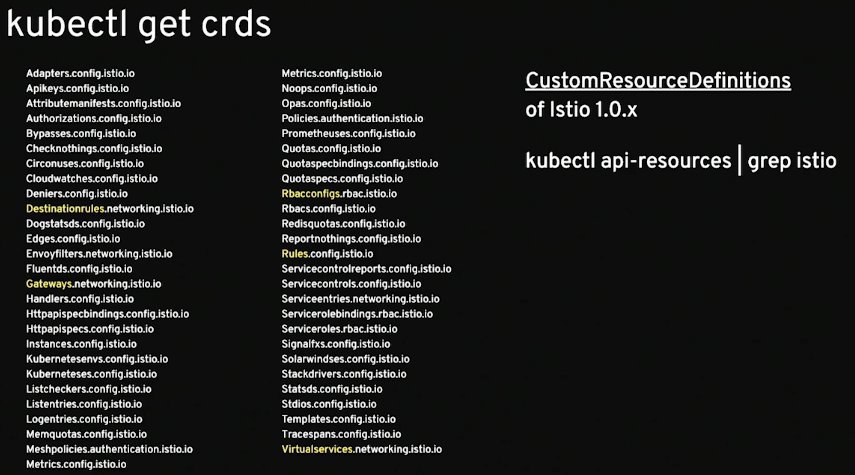

```
% kubectl get crds
NAME                                       CREATED AT
adapters.config.istio.io                   2020-07-22T23:25:58Z
attributemanifests.config.istio.io         2020-07-22T23:25:58Z
authorizationpolicies.security.istio.io    2020-07-22T23:25:58Z
clusterrbacconfigs.rbac.istio.io           2020-07-22T23:25:58Z
destinationrules.networking.istio.io       2020-07-22T23:25:58Z
envoyfilters.networking.istio.io           2020-07-22T23:25:58Z
gateways.networking.istio.io               2020-07-22T23:25:58Z
handlers.config.istio.io                   2020-07-22T23:25:58Z
httpapispecbindings.config.istio.io        2020-07-22T23:25:58Z
httpapispecs.config.istio.io               2020-07-22T23:25:58Z
instances.config.istio.io                  2020-07-22T23:25:58Z
istiooperators.install.istio.io            2020-07-22T23:25:58Z
peerauthentications.security.istio.io      2020-07-22T23:25:58Z
quotaspecbindings.config.istio.io          2020-07-22T23:25:58Z
quotaspecs.config.istio.io                 2020-07-22T23:25:58Z
rbacconfigs.rbac.istio.io                  2020-07-22T23:25:58Z
requestauthentications.security.istio.io   2020-07-22T23:25:58Z
rules.config.istio.io                      2020-07-22T23:25:58Z
serviceentries.networking.istio.io         2020-07-22T23:25:58Z
servicerolebindings.rbac.istio.io          2020-07-22T23:25:58Z
serviceroles.rbac.istio.io                 2020-07-22T23:25:58Z
sidecars.networking.istio.io               2020-07-22T23:25:58Z
templates.config.istio.io                  2020-07-22T23:25:58Z
virtualservices.networking.istio.io        2020-07-22T23:25:58Z
workloadentries.networking.istio.io        2020-07-22T23:25:58Z
```

Deploy with Istio/Envoy Sidecars

```shell
% kubectl config current-context
% minikube --profile istio-mk ip
192.168.99.102
% minikube --profile istio-mk status
% kubectl create namespace istio-demo
% kubectl config set-context $(kubectl config current-context) --namespace=istio-demo
% kubens
default
istio-demo
istio-system
kube-node-lease
kube-public
kube-system
```

Let’s clone https://github.com/redhat-developer-demos/istio-tutorial

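Manual inject

We have a deployment file, `customer/kubernetes/Deployment.yml`.

We use istioctl to inject the sidecar:

```shell
istioctl kube-inject -f customer/kubernetes/Deployment.yml
```

The printed manifest now includes the injected sidecar container alongside the application container.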

Automatic inject

```shell
% kubectl get namespace --show-labels
NAME              STATUS   AGE   LABELS
default           Active   47h   <none>
istio-demo        Active   15m   <none>
istio-system      Active   47m   istio-injection=disabled
kube-node-lease   Active   47h   <none>
kube-public       Active   47h   <none>
kube-system       Active   47h   <none>
% kubectl label namespace istio-demo istio-injection=enabled
% kubectl get namespace --show-labels
NAME              STATUS   AGE   LABELS
default           Active   47h   <none>
istio-demo        Active   17m   istio-injection=enabled
istio-system      Active   48m   istio-injection=disabled
kube-node-lease   Active   47h   <none>
kube-public       Active   47h   <none>
kube-system       Active   47h   <none>
```
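To pick out which namespaces have automatic injection turned on from a listing like the one above, a small filter is enough. This is a sketch that assumes the `--show-labels` layout, with the labels in the last column; the function name `injection_enabled_namespaces` is hypothetical.

```shell
# injection_enabled_namespaces: reads `kubectl get namespace --show-labels`
# output on stdin and prints the names of namespaces labeled
# istio-injection=enabled (labels are comma-separated in the last column).
injection_enabled_namespaces() {
  awk 'NR > 1 && $NF ~ /(^|,)istio-injection=enabled(,|$)/ { print $1 }'
}

# Usage against a live cluster:
#   kubectl get namespace --show-labels | injection_enabled_namespaces
```

On a live cluster the label selector `kubectl get namespace -l istio-injection=enabled` gives the same answer directly.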

Also, note that the YAML carries the annotation `sidecar.istio.io/inject: "true"`; that is the difference from a normal Deployment YAML:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: customer
    version: v1
  name: customer
spec:
  replicas: 1
  selector:
    matchLabels:
      app: customer
      version: v1
  template:
    metadata:
      labels:
        app: customer
        version: v1
      annotations:
        sidecar.istio.io/inject: "true"
    spec:
      containers:
      - env:
        - name: JAVA_OPTIONS
          value: -Xms15m -Xmx15m -Xmn15m
        name: customer
        image: quay.io/rhdevelopers/istio-tutorial-customer:v1.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: http
          protocol: TCP
        - containerPort: 8778
          name: jolokia
          protocol: TCP
        - containerPort: 9779
          name: prometheus
          protocol: TCP
        resources:
          requests:
            memory: "20Mi"
            cpu: "200m"
          limits:
            memory: "40Mi"
            cpu: "500m"
        livenessProbe:
          exec:
            command:
            - curl
            - localhost:8080/health/live
          initialDelaySeconds: 5
          periodSeconds: 4
          timeoutSeconds: 1
        readinessProbe:
          exec:
            command:
            - curl
            - localhost:8080/health/ready
          initialDelaySeconds: 6
          periodSeconds: 5
          timeoutSeconds: 1
        securityContext:
          privileged: false
```

```shell
% kubectl apply -f customer/kubernetes/Deployment.yml
deployment.apps/customer created
% kubectl get pods
NAME                        READY   STATUS            RESTARTS   AGE
customer-76bddbf59c-7p6mr   0/2     PodInitializing   0          11s
```

The READY column shows 0/2, so the pod has two containers: the customer application and the injected sidecar.
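The container count alone does not say which containers they are. A minimal check, assuming the injected container is named `istio-proxy` (the name Istio gives its sidecar); the function name `has_sidecar` is made up for illustration.

```shell
# has_sidecar: reads space-separated container names on stdin and
# succeeds (exit 0) if one of them is exactly "istio-proxy".
has_sidecar() {
  tr -s ' ' '\n' | grep -qx 'istio-proxy'
}

# Usage against a live cluster (pod name taken from `kubectl get pods`):
#   kubectl get pod customer-76bddbf59c-7p6mr \
#     -o jsonpath='{.spec.containers[*].name}' | has_sidecar && echo "sidecar present"
```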

```shell
% kubectl apply -f customer/kubernetes/Service.yml
service/customer created
```

Service.yml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: customer
  labels:
    app: customer
spec:
  ports:
  - name: http
    port: 8080
  selector:
    app: customer
```

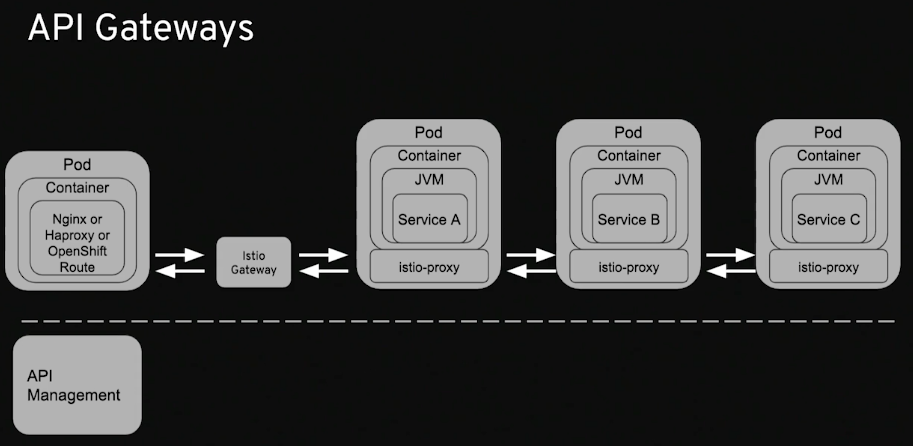

```shell
% kubectl apply -f customer/kubernetes/Gateway.yml
gateway.networking.istio.io/customer-gateway created
virtualservice.networking.istio.io/customer-gateway created
% kubectl get vs
NAME               GATEWAYS             HOSTS   AGE
customer-gateway   [customer-gateway]   [*]     18s
```

Gateway.yml:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: customer-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "*"
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: customer-gateway
spec:
  hosts:
  - "*"
  gateways:
  - customer-gateway
  http:
  - match:
    - uri:
        prefix: /customer
    rewrite:
      uri: /
    route:
    - destination:
        host: customer
        port:
          number: 8080
```

```shell
% kubectl apply -f preference/kubernetes/Deployment.yml
deployment.apps/preference-v1 created
% kubectl apply -f preference/kubernetes/Service.yml
service/preference created
% kubectl apply -f recommendation/kubernetes/Deployment.yml
deployment.apps/recommendation-v1 created
% kubectl apply -f recommendation/kubernetes/Service.yml
service/recommendation created
% kubectl get services
NAME             TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
customer         ClusterIP   10.98.110.140   <none>        8080/TCP   6m39s
preference       ClusterIP   10.97.254.164   <none>        8080/TCP   32s
recommendation   ClusterIP   10.108.35.221   <none>        8080/TCP   6s
% kubectl get vs
NAME               GATEWAYS             HOSTS   AGE
customer-gateway   [customer-gateway]   [*]     7m36s
```

call trace:

```shell
% kubectl get services -n istio-system
NAME                        TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                                      AGE
grafana                     ClusterIP      10.110.100.175   <none>        3000/TCP                                                                     70m
istio-egressgateway         ClusterIP      10.111.112.128   <none>        80/TCP,443/TCP,15443/TCP                                                     70m
istio-ingressgateway        LoadBalancer   10.108.217.161   <pending>     15020:30321/TCP,80:30350/TCP,443:30445/TCP,31400:31920/TCP,15443:31115/TCP   70m
istiod                      ClusterIP      10.103.248.183   <none>        15010/TCP,15012/TCP,443/TCP,15014/TCP,853/TCP                                70m
jaeger-agent                ClusterIP      None             <none>        5775/UDP,6831/UDP,6832/UDP                                                   70m
jaeger-collector            ClusterIP      10.103.241.201   <none>        14267/TCP,14268/TCP,14250/TCP                                                70m
jaeger-collector-headless   ClusterIP      None             <none>        14250/TCP                                                                    70m
jaeger-query                ClusterIP      10.101.27.250    <none>        16686/TCP                                                                    70m
kiali                       ClusterIP      10.111.79.49     <none>        20001/TCP                                                                    70m
prometheus                  ClusterIP      10.104.161.153   <none>        9090/TCP                                                                     70m
tracing                     ClusterIP      10.106.110.151   <none>        80/TCP                                                                       70m
zipkin                      ClusterIP      10.111.30.70     <none>        9411/TCP                                                                     70m
```

The `80:30350/TCP` entry for istio-ingressgateway shows that port 80 is exposed on NodePort 30350.
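Reading the NodePort out of the PORT(S) column can also be scripted. The sketch below assumes the `port:nodePort/protocol` entry format that `kubectl get services` prints; the function name `node_port_for` is hypothetical.

```shell
# node_port_for PORT PORTS_COLUMN
# Prints the NodePort mapped to service port PORT, given a PORT(S) column
# value such as "15020:30321/TCP,80:30350/TCP". Entries without a NodePort
# (e.g. "80/TCP") are ignored.
node_port_for() {
  printf '%s\n' "$2" | tr ',' '\n' |
    awk -F'[:/]' -v p="$1" 'NF == 3 && $1 == p { print $2 }'
}

# Usage against a live cluster (PORT(S) is the fifth column):
#   ports=$(kubectl get service istio-ingressgateway -n istio-system --no-headers | awk '{ print $5 }')
#   node_port_for 80 "$ports"
```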

```shell
% minikube --profile istio-mk ip
192.168.99.102
% curl 192.168.99.102:30350/customer
customer => preference => recommendation v1 from 'f11b097f1dd0': 1
```

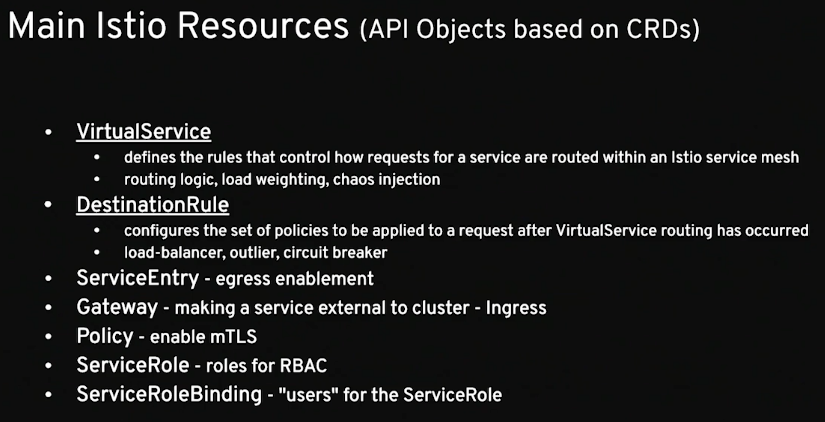

Shift traffic with VirtualService and DestinationRule

Let’s deploy recommendation v2:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: recommendation
    version: v2
  name: recommendation-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: recommendation
      version: v2
  template:
    metadata:
      labels:
        app: recommendation
        version: v2
      annotations:
        sidecar.istio.io/inject: "true"
    spec:
      containers:
      - env:
        - name: JAVA_OPTIONS
          value: -Xms15m -Xmx15m -Xmn15m
        name: recommendation
        image: quay.io/rhdevelopers/istio-tutorial-recommendation:v2.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          name: http
          protocol: TCP
        - containerPort: 8778
          name: jolokia
          protocol: TCP
        - containerPort: 9779
          name: prometheus
          protocol: TCP
        resources:
          requests:
            memory: "40Mi"
            cpu: "200m"
          limits:
            memory: "100Mi"
            cpu: "500m"
        livenessProbe:
          exec:
            command:
            - curl
            - localhost:8080/health/live
          initialDelaySeconds: 5
          periodSeconds: 4
          timeoutSeconds: 1
        readinessProbe:
          exec:
            command:
            - curl
            - localhost:8080/health/ready
          initialDelaySeconds: 6
          periodSeconds: 5
          timeoutSeconds: 1
        securityContext:
          privileged: false
```

```shell
kubectl apply -f recommendation/kubernetes/Deployment-v2.yml
```

Then watch the curl result:

```shell
while true
do curl 192.168.99.102:30350/customer
sleep .3
done
```

The output is:

```
customer => preference => recommendation v2 from '3cbba7a9cde5': 507
customer => preference => recommendation v1 from 'f11b097f1dd0': 540
customer => preference => recommendation v2 from '3cbba7a9cde5': 508
customer => preference => recommendation v1 from 'f11b097f1dd0': 541
customer => preference => recommendation v2 from '3cbba7a9cde5': 509
```

Scale v2 to 2 replicas:

```shell
kubectl scale --replicas=2 deployment/recommendation-v2
```

The output is below. With one v1 pod and two v2 pods, requests are spread evenly across the pods, so the v1:v2 ratio is 1:2:

```
customer => preference => recommendation v1 from 'f11b097f1dd0': 561
customer => preference => recommendation v2 from '3cbba7a9cde5': 530
customer => preference => recommendation v2 from '3cbba7a9cde5': 10
customer => preference => recommendation v1 from 'f11b097f1dd0': 562
customer => preference => recommendation v2 from '3cbba7a9cde5': 531
customer => preference => recommendation v2 from '3cbba7a9cde5': 11
```

Then set the v2 replicas back to 1:

```shell
kubectl scale --replicas=1 deployment/recommendation-v2
```

Now let’s define the DestinationRule (destination-rule-recommendation-v1-v2.yml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: recommendation
spec:
  host: recommendation
  subsets:
  - labels:
      version: v1
    name: version-v1
  - labels:
      version: v2
    name: version-v2
```

And define the VirtualService (virtual-service-recommendation-v2.yml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: recommendation
spec:
  hosts:
  - recommendation
  http:
  - route:
    - destination:
        host: recommendation
        subset: version-v2
      weight: 100
```

```shell
% kubectl apply -f istiofiles/destination-rule-recommendation-v1-v2.yml
destinationrule.networking.istio.io/recommendation created
% kubectl apply -f istiofiles/virtual-service-recommendation-v2.yml
virtualservice.networking.istio.io/recommendation created
```

Now the curl output is:

```
customer => preference => recommendation v2 from '3cbba7a9cde5': 1298
customer => preference => recommendation v2 from '3cbba7a9cde5': 1299
customer => preference => recommendation v2 from '3cbba7a9cde5': 1300
```

```shell
% kubectl get virtualservices
NAME               GATEWAYS             HOSTS              AGE
customer-gateway   [customer-gateway]   [*]                10d
recommendation                          [recommendation]   115s
% kubectl get destinationrules
NAME             HOST             AGE
recommendation   recommendation   3m58s
% kubectl describe vs recommendation
Name:         recommendation
Namespace:    istio-demo
Labels:       <none>
Annotations:  kubectl.kubernetes.io/last-applied-configuration:
                {"apiVersion":"networking.istio.io/v1alpha3","kind":"VirtualService","metadata":{"annotations":{},"name":"recommendation","namespace":"ist...
API Version:  networking.istio.io/v1beta1
Kind:         VirtualService
Metadata:
  Creation Timestamp:  2020-08-02T03:35:26Z
  Generation:          1
  Managed Fields:
    API Version:  networking.istio.io/v1alpha3
    Fields Type:  FieldsV1
    fieldsV1:
      f:metadata:
        f:annotations:
          .:
          f:kubectl.kubernetes.io/last-applied-configuration:
      f:spec:
        .:
        f:hosts:
        f:http:
    Manager:         kubectl
    Operation:       Update
    Time:            2020-08-02T03:35:26Z
  Resource Version:  8166
  Self Link:         /apis/networking.istio.io/v1beta1/namespaces/istio-demo/virtualservices/recommendation
  UID:               e568bf42-8e1e-483e-aac9-102a63679547
Spec:
  Hosts:
    recommendation
  Http:
    Route:
      Destination:
        Host:    recommendation
        Subset:  version-v2
      Weight:    100 #The weight
Events:          <none>
```

Let’s shift the route to v1 by editing the VirtualService and changing the subset to `version-v1`:

```shell
% kubectl edit vs/recommendation
```

We can also split the traffic by weight (virtual-service-recommendation-v1_and_v2.yml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: recommendation
spec:
  hosts:
  - recommendation
  http:
  - route:
    - destination:
        host: recommendation
        subset: version-v1
      weight: 90
    - destination:
        host: recommendation
        subset: version-v2
      weight: 10
```

```
customer => preference => recommendation v2 from '3cbba7a9cde5': 2535
customer => preference => recommendation v1 from 'f11b097f1dd0': 1246
customer => preference => recommendation v1 from 'f11b097f1dd0': 1247
customer => preference => recommendation v1 from 'f11b097f1dd0': 1248
customer => preference => recommendation v1 from 'f11b097f1dd0': 1249
customer => preference => recommendation v1 from 'f11b097f1dd0': 1250
customer => preference => recommendation v1 from 'f11b097f1dd0': 1251
customer => preference => recommendation v1 from 'f11b097f1dd0': 1252
customer => preference => recommendation v1 from 'f11b097f1dd0': 1253
```
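A handful of lines is too small a sample to judge a 90/10 split. One way to measure it is to tally the responses by version; the sketch below assumes the response format shown above (`... recommendation vN from ...`), and the function name `count_versions` is made up for illustration.

```shell
# count_versions: reads customer responses on stdin and prints how many
# came from each recommendation version, one "version count" pair per line.
count_versions() {
  awk '{
         for (i = 1; i < NF; i++)
           if ($i == "recommendation" && $(i + 1) ~ /^v[0-9]+$/) n[$(i + 1)]++
       }
       END { for (v in n) print v, n[v] }' | sort
}

# Usage against the running demo (100 requests):
#   for i in $(seq 1 100); do curl -s 192.168.99.102:30350/customer; done | count_versions
```

With the weights above, the v1 count should land near 90 out of 100, though any single run will vary.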

If we delete the VirtualService and DestinationRule, routing falls back to the default behavior, and v1 and v2 (one pod each) receive about 50% of the traffic each.

```shell
% kubectl delete vs recommendation
virtualservice.networking.istio.io "recommendation" deleted
% kubectl delete dr recommendation
destinationrule.networking.istio.io "recommendation" deleted
```

```
customer => preference => recommendation v1 from 'f11b097f1dd0': 1736
customer => preference => recommendation v2 from '3cbba7a9cde5': 2654
customer => preference => recommendation v1 from 'f11b097f1dd0': 1737
customer => preference => recommendation v2 from '3cbba7a9cde5': 2655
```

Smart routing based on user-agent header (Canary Deployment).

Route all recommendation traffic to v1:

```shell
kubectl create -f istiofiles/destination-rule-recommendation-v1-v2.yml
kubectl create -f istiofiles/virtual-service-recommendation-v1.yml
```

virtual-service-recommendation-v1.yml:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: recommendation
spec:
  hosts:
  - recommendation
  http:
  - route:
    - destination:
        host: recommendation
        subset: version-v1
      weight: 100
```

Route Safari users to v2:

```shell
kubectl replace -f istiofiles/virtual-service-safari-recommendation-v2.yml
kubectl get virtualservice
```

virtual-service-safari-recommendation-v2.yml:

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: recommendation
spec:
  hosts:
  - recommendation
  http:
  - match:
    - headers:
        baggage-user-agent:
          regex: .*Safari.*
    route:
    - destination:
        host: recommendation
        subset: version-v2
  - route:
    - destination:
        host: recommendation
        subset: version-v1
```
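The rule above is evaluated top to bottom: a request whose `baggage-user-agent` header matches `.*Safari.*` goes to v2, and everything else falls through to v1. The sketch below only imitates that decision locally with `grep`; it is not how Envoy evaluates the rule, and the function name `route_for_agent` is hypothetical.

```shell
# route_for_agent USER_AGENT
# Prints the subset the VirtualService above would pick for the given
# user-agent string: version-v2 on a whole-string match of .*Safari.*,
# otherwise the default route, version-v1.
route_for_agent() {
  if printf '%s\n' "$1" | grep -Eqx '.*Safari.*'; then
    echo version-v2
  else
    echo version-v1
  fi
}

route_for_agent "Mozilla/5.0 (Macintosh) AppleWebKit/605.1.15 Version/13.1 Safari/605.1.15"
route_for_agent "curl/7.64.1"
```

Chrome user-agent strings also contain `Safari/...`, so this regex sends Chrome users to v2 as well. Against the running demo, `curl -A Safari 192.168.99.102:30350/customer` should reach v2 and a plain `curl` should reach v1, assuming the customer service forwards the incoming User-Agent as the `baggage-user-agent` header, as the header name in the rule suggests.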

Practice mirroring and dark launch (virtual-service-recommendation-v1-mirror-v2.yml):

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: recommendation
spec:
  hosts:
  - recommendation
  http:
  - route:
    - destination:
        host: recommendation
        subset: version-v1
    mirror:
      host: recommendation
      subset: version-v2
```

```shell
kubectl logs -f $(kubectl get pods | grep recommendation-v2 | awk '{ print $1 }') -c recommendation
```

Load Balancer

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: recommendation
spec:
  host: recommendation
  trafficPolicy:
    loadBalancer:
      simple: RANDOM
```
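With `simple: RANDOM`, each request goes to a randomly chosen endpoint instead of cycling through the pods in turn, so consecutive requests may hit the same pod several times in a row. The sketch below is only a local simulation of uniform random selection, not Envoy's implementation; the function name `simulate_random_lb` and its arguments are made up for illustration.

```shell
# simulate_random_lb REQUESTS PODS [SEED]
# Picks one of PODS endpoints uniformly at random for each of REQUESTS
# requests and prints the number of hits per pod.
simulate_random_lb() {
  awk -v n="$1" -v pods="$2" -v seed="${3:-1}" 'BEGIN {
    srand(seed)
    for (i = 0; i < n; i++) hits[int(rand() * pods) + 1]++
    for (p = 1; p <= pods; p++) print "pod" p, hits[p] + 0
  }'
}

# 300 requests across 3 pods: the counts are close to, but rarely exactly, 100 each.
simulate_random_lb 300 3
```

Running the earlier curl loop against a service scaled to several replicas is the way to observe the same effect on the real mesh.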